AI

Llama Guard: What It Actually Does (And Doesn't Do)

Llama Guard isn't a firewall. It's not antivirus for your prompts. And if you're treating it like either, you're probably leaving gaps in your AI security.

AI

Llama Guard isn't a firewall. It's not antivirus for your prompts. And if you're treating it like either, you're probably leaving gaps in your AI security.

Cybersecurity

Bing Chat. ChatGPT plugins. Hundreds of production apps. Same vulnerability: no separation between system instructions and user input. If you're concatenating prompts, you're vulnerable.

LLMs

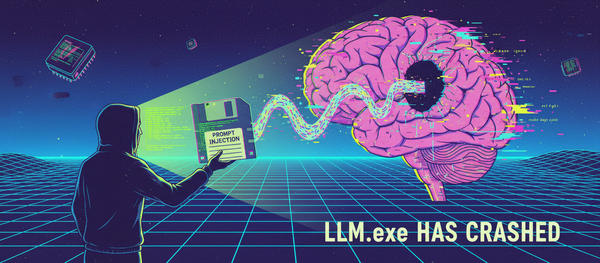

Wanna learn how to hack an AI? Now is your chance! I'm going to show you three prompt injection attacks that work on ChatGPT, Claude, and most other LLMs. You can test these yourself in the next five minutes. No coding required. Also...you didn't 'hear' this from me...

LLMs

Prompt injection is the #1 security threat facing AI systems today and there's no clear path to fixing it. This vulnerability exploits a fundamental limitation: LLMs can't distinguish between trusted instructions and malicious user input. Understanding prompt injection isn't optional—it's critical.

Machine Learning

If you've been paying attention to the AI space lately, you've probably heard about the Model Context Protocol, or MCP. Released by Anthropic in November 2024, it's being hailed as a game-changer for AI integrations—and honestly, it kind of is. It's

Machine Learning

You've probably heard AI is taking over the world - but here's the dirty little secret: most AI models are shockingly fragile. I'm talking 'one pixel change breaks everything' fragile. Today we'll cover what AI actually is, how machine learning

Machine Learning

The title about says it all, doesn't it? LLMs are a lot dumber than most folks seem to realize, and today, we're going to blow those vulnerabilities open. Let's get into it. LLM Basics (And why they aren't as smart as you