LLMs

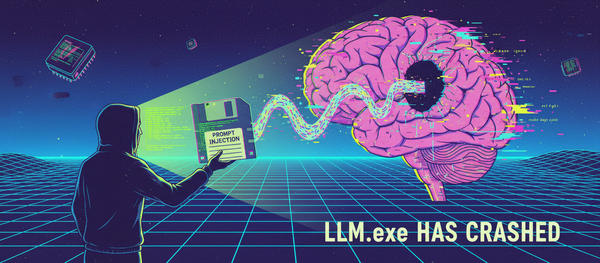

Prompt Injection: The Unfixable Vulnerability Breaking AI Systems

Prompt injection is the #1 security threat facing AI systems today and there's no clear path to fixing it. This vulnerability exploits a fundamental limitation: LLMs can't distinguish between trusted instructions and malicious user input. Understanding prompt injection isn't optional—it's critical.